The Tower of Babel Problem: AI Will Scramble Human Communication Channels

This article explores the possibility that, with the emergence of AI that can pass the Turing Test, we may be facing a "Tower of Babel problem," or a situation in which human communication tools become very difficult to use.

It argues that the problem is deeper than "deepfakes." Drawing on the mythical story of the Tower of Babel, it illustrates the potential for agentic AI to disrupt human sensemaking systems on a large scale, and it underscores the need to develop robust new institutional frameworks to manage the risks. The discussion extends to the need for near-term proactive solutions and the longer-term design of adaptive "operating systems" for governance in the AI era, aimed at safeguarding democratic processes and ensuring that technological progress contributes positively to human well-being.

Myths as “Pattern Language”

From the standpoint of modern life and its problems, myths and folklore might at first glance seem archaic, remnants of a pre-scientific age whose relevance is lost in the light of modern empirical understanding. However, is it possible that, at times, these stories play at least some role in cultural sensemaking — perhaps by providing a speculative framework for understanding complex contemporary challenges? A kind of basic "pattern language" that captures the essence of recurring situations and human behaviors?

Skepticism toward ideas and arguments that apply ancient myths to today's issues seems both natural and generally warranted, given the profound differences in context and knowledge. Nevertheless, the work of Carl Jung and others suggests that there might be a more useful connection. Their exploration of archetypes (universal, symbolic patterns that arguably recur across different cultures and times) hints at underlying psychological motifs that transcend era and geography. This raises the possibility that these archetypes reveal consistent aspects of human psychology and group dynamics that persist through time.

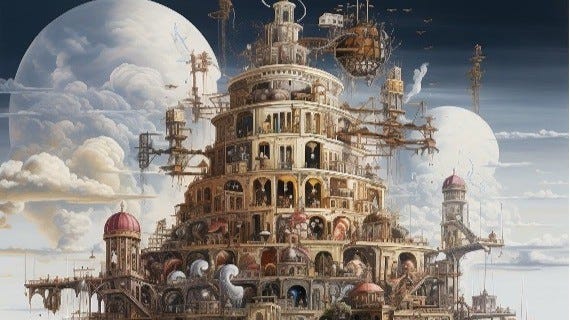

Consider the myth of the Tower of Babel. Perhaps surprisingly, when viewed through a speculative lens, this legend seems to offer an interesting potential allegory for our contemporary questions about AI and its impact on humanity.

In this ancient story, humanity, speaking a single language, comes together to build a tower that would reach the heavens, a symbol of their technological ambition and collective effort. However, their hubris invites divine wrath, resulting in the confounding of their speech and the subsequent scattering of peoples across the earth. The tale underscores the consequences of unchecked ambition and the fragmentation of a system that becomes, arguably, too grandiose for its own good.

This allegory's relevance persists even if one doesn't subscribe to the metaphysical beliefs underpinning the original narrative. It offers a "design pattern" for understanding the potential pitfalls in the pursuit of technological growth and maniacal purpose.

So how could the ancient Tower of Babel narrative possibly find useful parallels in the construction of a modern technology stack?

To begin with, there is an interesting surface parallel between the tower in the myth, an edifice composed of many layers intended to reach the divine, and our technology stack, built from numerous layers of code, algorithms, and interfaces. Each layer adds complexity and capability, and as we climb higher, the stack becomes more powerful and, potentially, more precarious. But the Tower of Babel parallels go deeper.

The Tower of Babel Problem

The allegory really gets interesting when we look more closely at the odd parallels around the disruption of human communication and the organization of power. Already, we have begun an ongoing conversation about digital tools for distributing misinformation and the emergence of deepfakes. But how bad could it get?

It is worth remembering that the earliest crop of Large Language Models (LLMs) were apparently intended to be components in AI agent personal assistant systems. For example, internal instructions in Microsoft’s AI-assisted search system suggest the original intention may have been to release an AI personal assistant named Sydney. But artificial agency, the ability to commission AI to directly take on real-world tasks, is not the same thing as traditional artificial intelligence and the current crop of LLMs, which have largely focused on writing and paperwork.

Does it make any difference? Well, consider the near-future possibility of an AI agent with a voice interface. There is no doubt this system could be gainfully used in all kinds of customer-interface situations. But there is no reason it couldn’t also be deployed to run a scam on the elderly -- call everyone 70 years old or older within a particular zip code, imply it is someone affiliated with law enforcement without actually saying so, factually describe several recent crimes in the area in frightening detail, all before recommending a particular alarm system and asking for a credit card. There is a case to be made that this is legal under today’s laws. Now imagine this kind of manipulation at digital scale.

It’s not actually too difficult to imagine, because we have already seen parallel scenarios take root. For example, even simple recording-driven bots and scammers have managed to make voice lines largely unusable, and platforms like Twitter and Facebook are plagued by bots pushing a range of agendas and crypto scams. And of course Twitter and Facebook have already played a role in destabilizing more than one government.

Cracked AI agents promise to add kerosene to this situation by ultimately making it all but impossible to tell who is human, and who isn’t, in any communication intermediated by a wire. These aren't Skynet's terminators and shoggoth paperclip maximizers -- they're tomorrow's con artists, sales bots, social media manipulators, and crypto scammers, and are potentially capable of undermining human-to-human communications and the institutions that are built on this principle, at a scale we've never seen before.

This is the “Tower of Babel” problem.

That is, it is a situation where the rapid advancement and proliferation of the artificial intelligence layers of the technology stack, particularly in the form of AI agents, have the potential to lead to a breakdown in effective human communication and the potential manipulation or destabilization of societal institutions.

Extending our “Tower of Babel” analogy into a scenario exercise, it could be argued that financial systems, government agencies, and the key individual employees at just about any important company are all ripe for potential exploitation by AI agents that sound an awful lot like other people. It's a bit like having a new open API to society's most fundamental institutions.

And, as the parable of Babel seems to hint, that could be a very big deal. We like to give the emergence of human intelligence a lot of credit for the amazing leap in human progress over the last 10,000 years or so. But this credit is misplaced. Homo sapiens have been about as smart as they are today for tens of thousands of years. It is the emergence of institutions, not brains, that really seems to have launched things forward. Institutions are the technology that humans developed to allow more positive-sum, longer-term cooperation among individuals. They are arguably the most important technology we have ever created.

But in our Tower of Babel scenario, financial systems, public opinion, government agencies — all are ripe for potential large-scale exploitation by even basic AI agents. Cracked AI agents with convincing, real-time voice capabilities could potentially interface directly with society's most fundamental bureaucratic systems. If our institutional framework were a literal operating system, this is the sort of situation that could see stack overflow errors and system crashes as the legacy systems simply fail to keep up. But it's not just incidental systemic risk that needs to be considered; it's also the empowerment of the kinds of people who want to see traditional institutions fail.

Imagine warlords who wield algorithms instead of (or in addition to) armies. The potential for destabilization and conflict is rife, as agentic AI amplifies the scale of every bad actor with an internet connection. Once the technology stack reaches the point where it manifests agentic AI, a megalomaniacal sociopath or geopolitical adversary could gain access to the equivalent of real-world DDoS weapons, capable of overwhelming the individuals and institutions that rely on mere human bandwidth.

It may well be that there is nothing a rogue AI could do that a rogue person somewhere is not likely to try first.

And the inner workings of democratic societies would seem to be particularly vulnerable to the “Tower of Babel problem” because they explicitly rely on a messy “marketplace of ideas” for collective sensemaking. How does democratic deliberation even work in a world where an infinite number of “concerned voices” can be generated at the touch of a button? Even today, I suspect nobody really has any idea how many people on social media are people. The BabelBots are already here, they’re just not evenly distributed.

This isn't without precedent. The early days of industrialization saw similar upheavals, as new technologies tore through established norms and systems. The solution then wasn't to wait for a new breed of better or more enlightened humans adapted to the technological landscape – it was tried and the results weren’t pretty. Rather, the solution was to actively design and construct robust new kinds of institutions capable of channeling these powerful forces toward positive externalities and away from negative externalities.

Looked at a certain way, the parable of the Tower of Babel suggests an interesting conclusion: organizations themselves are a technology -- and they need to be patched well enough to keep up with new challenges or there is a risk of the whole thing falling apart. Certain post-industrialization events in the 20th century seem to lend some weight to this interpretation.

From this perspective, it's clear that now is the time to start putting together the pieces of a new institutional framework -- an "operating system" for the AI era -- that can adapt as fast as the technologies it seeks to govern.

This isn't about stifling innovation; it's about ensuring that the digital economy continues to give humanity as a whole more than it takes, so that each transaction, each interaction, builds rather than extracts value.

In this Tower of Babel scenario, proactive regulation isn't just a stopgap; it's an essential tool to bridge the space between where we are and where we need to be. Over the longer term, if we can design these new “operating systems” correctly, we could illuminate the path to a future of unprecedented progress and human wellbeing.

Not a bad option.